Imagine a world where your secrets truly belong to you, where a conversation is guarded so well that any attempt to listen in gives itself away. This is the promise of Quantum Key Distribution (QKD), a technology that relies on the laws of quantum physics, rather than on the difficulty of a math problem, to protect encryption keys. It’s like having a private conversation shielded from prying eyes and fortified by what we know about the universe.

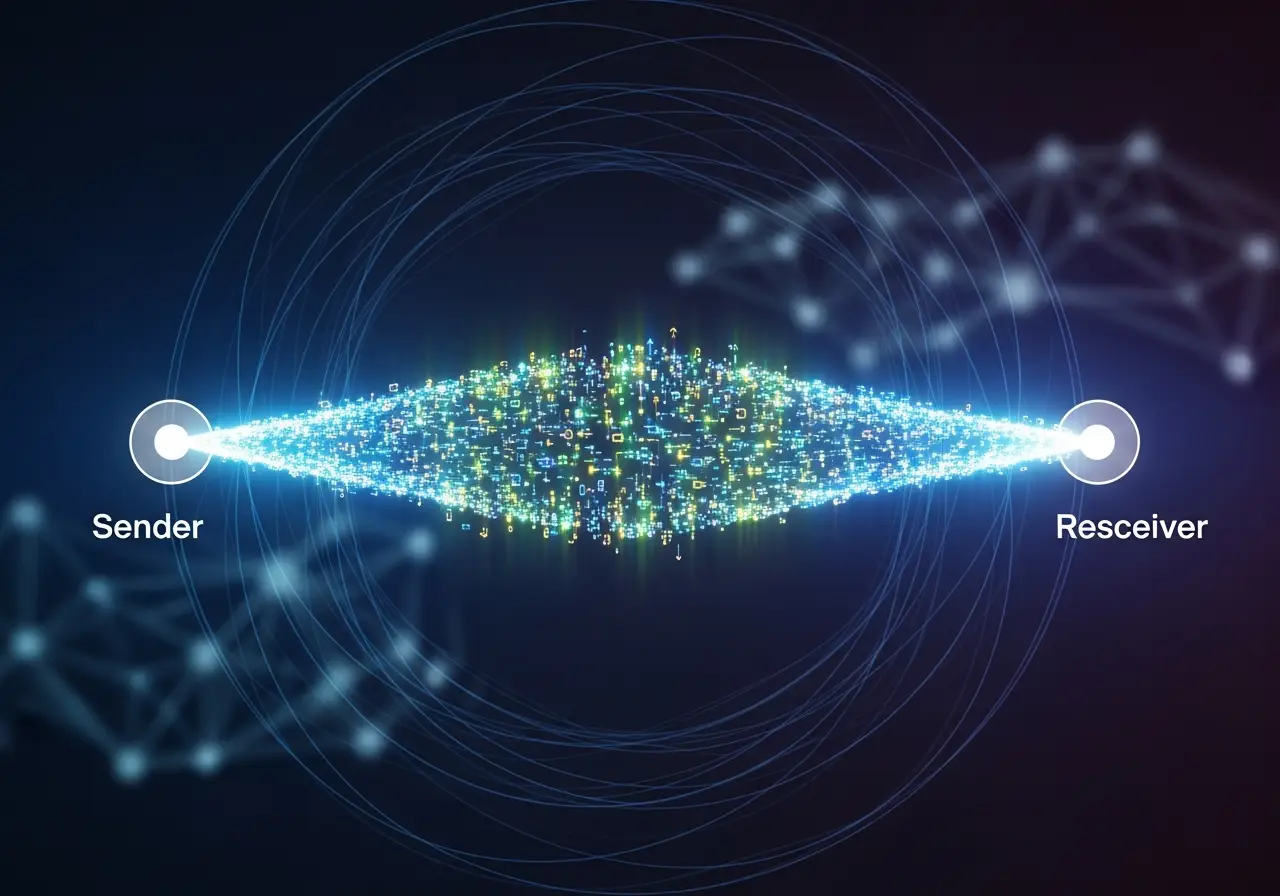

In today’s digital landscape, where threats are all too common, security can feel as hard to hold onto as a handful of sand. Enter QKD, which offers a solution through clever manipulation of tiny particles of light called photons. These photons carry the raw material for encryption keys, and if anyone tries to eavesdrop, the act of measuring them disturbs their states. That disturbance shows up as errors the legitimate users can spot when they compare a sample of their results. It’s a simple yet powerful concept: an eavesdropper cannot read the key without leaving evidence behind.
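To make the detection idea concrete, here is a minimal sketch of it as a toy simulation. It assumes the BB84 protocol, which this article does not name, and it models photons as plain (bit, basis) pairs rather than real quantum states. The function names `measure` and `bb84_error_rate` are made up for illustration, and the eavesdropper is assumed to use a simple intercept-and-resend strategy.

```python
import random

def measure(bit, prepared_basis, measure_basis, rng):
    """Toy measurement: the right basis reads the bit, the wrong one gives a coin flip."""
    if prepared_basis == measure_basis:
        return bit
    return rng.randint(0, 1)

def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Return the error rate on the bits where sender and receiver used the same basis."""
    rng = random.Random(seed)
    kept = 0
    errors = 0
    for _ in range(n_photons):
        # Sender picks a random bit and a random basis (0 or 1) to encode it in.
        bit = rng.randint(0, 1)
        sender_basis = rng.randint(0, 1)
        photon_bit, photon_basis = bit, sender_basis

        if eavesdrop:
            # Assumed attack: measure in a random basis, then resend what was seen.
            eve_basis = rng.randint(0, 1)
            photon_bit = measure(photon_bit, photon_basis, eve_basis, rng)
            photon_basis = eve_basis

        # Receiver also measures in a random basis.
        receiver_basis = rng.randint(0, 1)
        received_bit = measure(photon_bit, photon_basis, receiver_basis, rng)

        # Only positions where both parties chose the same basis are kept.
        if receiver_basis == sender_basis:
            kept += 1
            if received_bit != bit:
                errors += 1
    return errors / kept if kept else 0.0

if __name__ == "__main__":
    print("no eavesdropper:  ", bb84_error_rate(4000, eavesdrop=False))
    print("with eavesdropper:", bb84_error_rate(4000, eavesdrop=True))
```

In this idealized model the kept bits match perfectly when nobody listens in, while an intercept-and-resend eavesdropper pushes the error rate to roughly one in four. Real systems also see errors from noise and imperfect hardware, so in practice the users compare the measured error rate against a threshold rather than expecting zero.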

At its core, QKD relies on quantum states that cannot be measured without being disturbed. Some QKD schemes go a step further and use entanglement: two particles that stay correlated even when they are far apart. Think of it like two close friends at a concert, understanding each other’s moods and movements without needing words. If someone tries to come between the pair, the interference is obvious.

In practice, QKD could revolutionize secure communication. Imagine the keys behind banking transactions, government exchanges, or healthcare data protected at a fundamental level. But introducing this technology isn’t without its challenges: quantum signals are fragile and fade over distance, and the hardware needed to send and detect single photons is demanding to build and run.

As we stand on the brink of this quantum leap, the potential applications are huge. Yet are we ready to embrace this change, to step into a future where silently intercepted communications become tales of the past? Integrating QKD not only brings protection but also marks a shift in how we think about privacy: a chance to take control of our information in a way that was previously unimaginable.

This isn’t just adopting a new technology; it’s a decisive move toward safer communication. As we face the future, the chance to secure our secrets and shape our destinies in a more private digital world lies ahead. Are you prepared to join this journey?