What if you could see reality not as a static stage, but as a dynamic dance shaped by your very presence? Welcome to the intriguing world of Relational Quantum Mechanics, where each thought, glance, and intention adds color to the cosmic tableau. It’s a universe where quantum states flourish through connection, revealing mysteries only in the interplay between observers. This journey invites you to uncover a reality where your consciousness crafts the narrative, each experience adding a thread to the intricate tapestry of existence. Ready to explore the relationships that define our quantum world?

In this cosmic choreography, reality shifts with the observer’s influence. Think of each particle, even each quark, not as a mere number but as a performer reacting to your gaze, like an actor on a grand stage. In Relational Quantum Mechanics, your consciousness acts as both a spotlight and a conductor, influencing how particles relate. The universe doesn’t follow a linear script; it pulses with life, waiting for you to observe and shape its form. It’s not about where you are, but about how you perceive your surroundings.

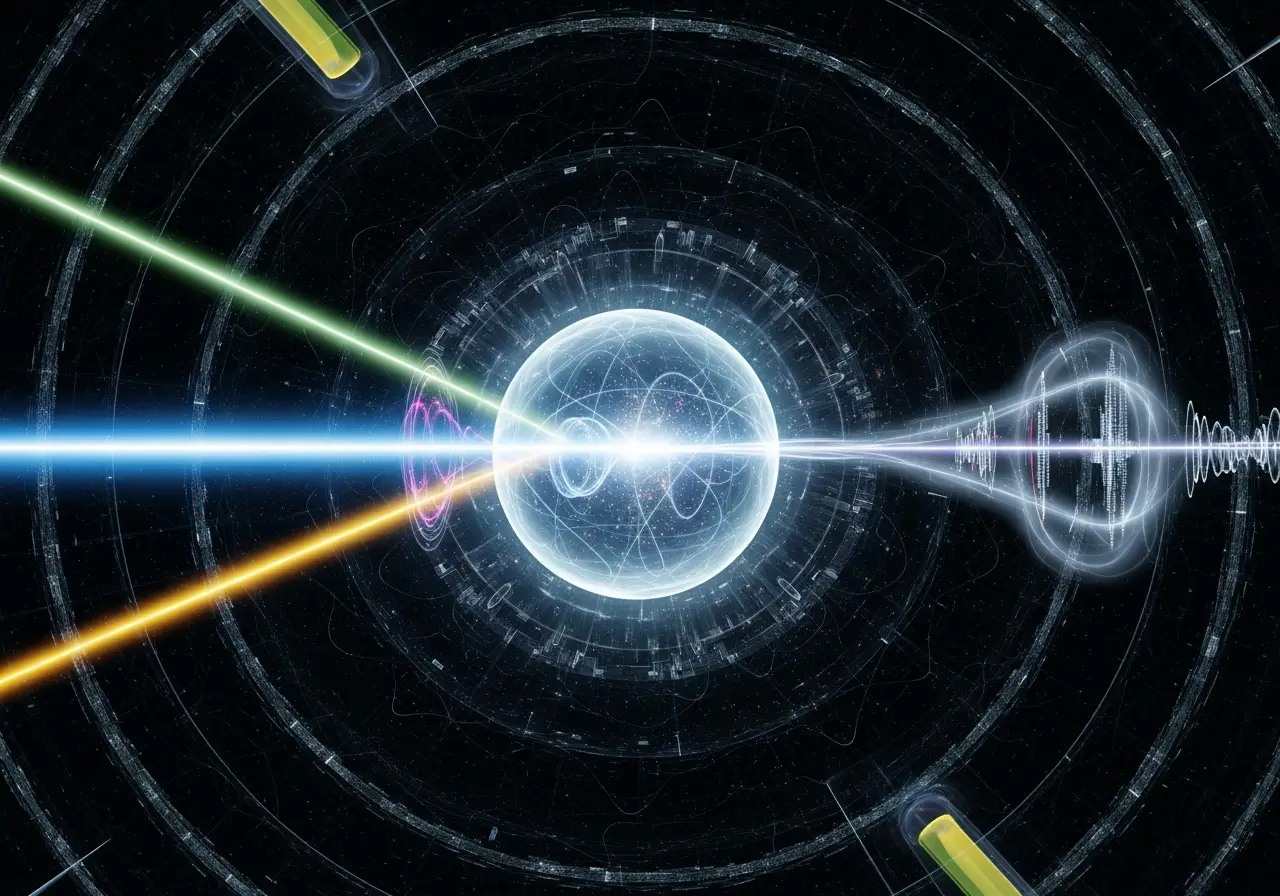

Imagine you’re at a lively party, where every move conjures new possibilities and every decision changes the tempo of the dance. At the heart of Relational Quantum Mechanics is the idea that quantum states are relational: a system’s properties are defined only relative to another system it interacts with. Observing a particle is like switching on a light, revealing a path that was hidden until that moment. Each observation ripples through the cosmic pond, showcasing the interconnected nature of all things.

Now, let’s delve even deeper. In Relational Quantum Mechanics, context is everything. Picture two observers looking at the same electron: each can give a different, equally valid account of it, shaped by how each has interacted with it. It’s like two people standing on opposite sides of a sculpture: the object is shared, but each sees a different face of it. This concept opens the door to a beautifully fluid reality where nothing is truly fixed. The cosmos playfully reminds us that stability is just another illusion.

This merging of physics and philosophy raises profound questions. If reality takes shape through interactions, what does that mean for truth? Relational Quantum Mechanics challenges the notion of observer-independent facts, suggesting that truth is more like a many-faceted gem whose appearance changes with each observer’s viewpoint. Each experience adds a layer to the mosaic of existence, blurring the line between objective fact and subjective interpretation. It’s enough to make your head spin faster than a quark!

Don’t overlook coherence, the graceful movement that emerges from every interaction. Imagine coherence as your dance partner, guiding you across reality’s floor. Align your thoughts, emotions, and actions so they sway in harmony, creating the reality you wish to experience. Miss a step, and you might find yourself in chaos. Embrace this dance consciously, becoming both a participant and a creator, weaving new patterns into the quantum fabric.

On a broader level, Relational Quantum Mechanics reshapes our view of the universe. What if, instead of seeing ourselves as detached observers, we embraced our roles as interconnected parts of the cosmic design? This narrative shift could profoundly affect our collective perspective, reminding us that we share this dance with the cosmos. Each vibration influences the whole, creating ripples of change through the vast quantum ocean.

Relational Quantum Mechanics encourages us to move beyond the confines of rigid classification. It invites us into a community where every thought and intention matters. Recognizing that existence thrives on relationships—between particles, minds, and hearts—empowers us to be active agents of change, shifting the balance of the universe.

As we venture beyond well-charted quantum paths into observer-based realities, it’s worth remembering that Relational Quantum Mechanics extends beyond theoretical physics. It invites each of us to help craft our own narrative, one in which consciousness doesn’t just witness the universe but helps shape it. So, fellow cosmic dancers, tune into your surroundings and harmonize your minds; let’s co-create the vibrant choreography of our world. Ready to explore this fascinating dance? The universe eagerly awaits your participation.