We’ve all been there: staring at terminal output, job ID `job_1138_a0c7` sitting at `COMPLETED`, but the bitstring looks like it was generated by a dropped lottery ball. You know, the kind of noise that screams “invalid solution,” not “aha, I found it.” The textbooks talk about superposition, and that’s grand for idealized circuits. But when you’re trying to run superposition principle circuits on a real-world backend, those rogue, stray quantum states – the ones we’re calling “Orphan Qubits” – start contaminating your readout. It’s enough to make you question whether you’re doing quantum computing or just advanced statistical guessing.

Superposition Circuit Contamination

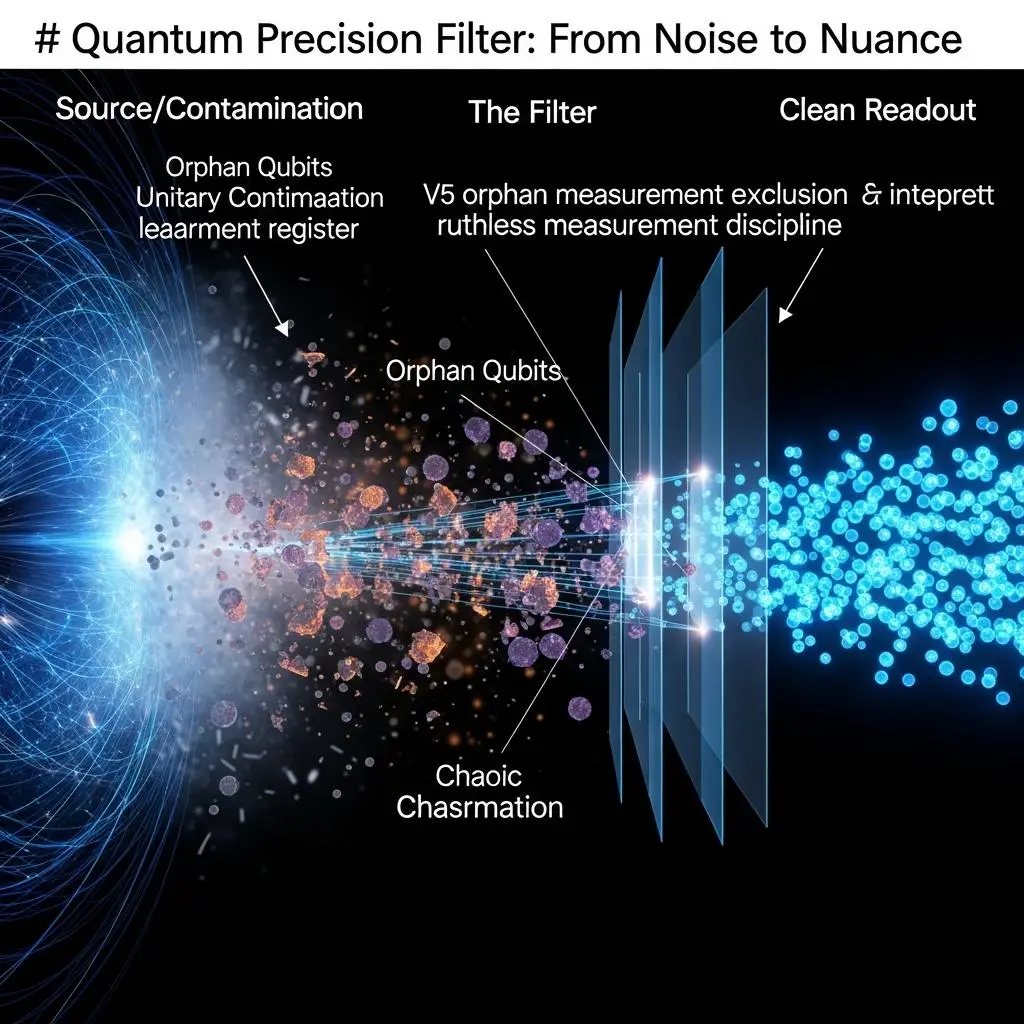

The problem isn’t usually the gate fidelity itself, not entirely. It’s what happens *during* measurement. You hit the button, and suddenly, a cluster of qubits that *should* be collapsing neatly into your desired state decides to go off-script. They’re not fully decohered, but they’re not part of the coherent computation anymore either. These aren’t your clean, active qubits; they’re the semi-collapsed, rogue elements that sneak into your measurement register. We call this “Unitary Contamination,” and it’s the silent killer of nontrivial computations on today’s hardware.

Circuits for Superposition Principle: The Measurement Trap

Think about it. You’re building a circuit, perhaps a variant of those demonstrating the superposition principle, aiming for a specific outcome. You’ve calibrated the hell out of your backend, but then the measurement phase hits. A certain percentage of your shots show these anomalous bitstrings. If the “poison qubit” ratio – those Orphan Qubits exhibiting this contamination – starts creeping past roughly 10%, your entire measurement outcome can collapse. It’s not just a few bad bits; it’s the signal getting drowned in noise that isn’t even *part* of the intended unitary evolution anymore. This isn’t noise *from* the computation; it’s noise *leaking into* the computation’s final state via faulty measurement channels.
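To make that ~10% figure concrete, here is a minimal sketch of how you might track it. It assumes the ratio is read as the fraction of shots landing outside the bitstrings your circuit should produce, and it uses a GHZ-style circuit (expected outcomes `000` and `111`) with made-up counts. The `orphan_ratio` helper and the `ORPHAN_THRESHOLD` constant are hypothetical names for illustration, not part of any SDK.

```python
from typing import Dict, Set

# The ~10% figure from the text, treated here as a tunable assumption.
ORPHAN_THRESHOLD = 0.10


def orphan_ratio(counts: Dict[str, int], expected: Set[str]) -> float:
    """Fraction of shots whose bitstring falls outside the expected support."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("counts contains no shots")
    anomalous = sum(n for bits, n in counts.items() if bits not in expected)
    return anomalous / total


# Illustrative (made-up) counts for a 3-qubit GHZ-style circuit.
counts = {"000": 450, "111": 430, "010": 70, "101": 50}
ratio = orphan_ratio(counts, expected={"000", "111"})
over_threshold = ratio > ORPHAN_THRESHOLD

print(f"orphan ratio: {ratio:.3f}, over threshold: {over_threshold}")
```

Defining the expected support by hand only works for circuits whose ideal output you already know, which is usually the case for small superposition demos.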

Superposition Principle Circuits: Navigating Measurement Anomalies

So, how do you even begin to combat this? The answer, as we’re finding, isn’t just more layers of error correction that we can’t even run yet. It’s about ruthless measurement discipline. We’ve been implementing what we call the V5 orphan measurement exclusion. It’s not a hack; it’s a core programming technique. We treat anomalous measurement outcomes—shots where a subset of qubits just aren’t behaving—as a separate signal. Instead of discarding the whole job, we identify these “orphan measurements” and down-weight or outright exclude the problematic shots and qubits from our final inference. This isn’t about fixing the hardware; it’s about writing smarter programs that can detect and mitigate these measurement-induced contaminations *in situ*.
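As a rough illustration of the down-weight-or-exclude idea, the sketch below reweights a counts dictionary before normalizing it. It is not the V5 implementation itself. The orphan test used here is an assumption chosen for simplicity: the leftmost bit belongs to a spectator qubit that was prepared in 0 and never touched, so any shot where it reads 1 is flagged. `reweight_counts` and `spectator_flipped` are hypothetical names.

```python
from typing import Callable, Dict


def reweight_counts(
    counts: Dict[str, int],
    is_orphan: Callable[[str], bool],
    orphan_weight: float = 0.0,
) -> Dict[str, float]:
    """Return a normalized distribution with flagged shots scaled by orphan_weight.

    orphan_weight=0.0 excludes flagged shots outright; values between 0 and 1
    down-weight them instead.
    """
    weighted = {
        bits: n * (orphan_weight if is_orphan(bits) else 1.0)
        for bits, n in counts.items()
    }
    total = sum(weighted.values())
    if total == 0:
        raise ValueError("every shot was excluded")
    return {bits: w / total for bits, w in weighted.items() if w > 0}


def spectator_flipped(bits: str) -> bool:
    """Assumed orphan signature: the untouched spectator (leftmost bit) reads 1."""
    return bits[0] == "1"


# Illustrative (made-up) counts; the leftmost bit is the spectator.
counts = {"000": 480, "011": 470, "100": 30, "111": 20}

excluded = reweight_counts(counts, spectator_flipped)            # hard exclusion
softened = reweight_counts(counts, spectator_flipped, 0.5)       # down-weighting

print(excluded)
print(softened)
```

Hard exclusion throws away shots, so it trades statistics for cleanliness; down-weighting keeps some of that information at the cost of letting a little contamination through.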

Superposition Principle Circuits: Isolating Quantum Interference

This V5 discipline means we’re not just passively accepting whatever bitstring the backend coughs up. We’re actively filtering based on the statistical signature of an orphan qubit. It’s about building a measurement filter directly into the programming logic. For superposition principle circuits, this becomes critical. You want to see that clean interference pattern, not a statistical mess.

By identifying shots that deviate significantly from expected marginal distributions or stabilizer structures, we can isolate and remove the contamination. The goal is to improve the *effective* SPAM (State Preparation and Measurement) fidelity without touching the hardware calibration itself. The circuit layout and readout mapping are chosen deliberately to make these orphans easier to detect and isolate, effectively creating a quantum state exclusion layer *on top of* standard measurement.

For those of you pushing the envelope on NISQ devices, particularly with circuits designed to showcase fundamental quantum phenomena like the superposition principle, this is where the real gains are. Stop treating noise as an abstract error and start treating the measurement outcome as a data stream with inherent quality flags. The V5 approach allows us to push nontrivial computations forward by acknowledging and actively managing the limitations of mid-circuit measurement. This isn’t about theory; it’s about what we’re seeing in the logs, job ID after job ID, on real hardware. The next benchmark isn’t about more qubits; it’s about cleaner readouts.
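The marginal-distribution and stabilizer checks mentioned above can be sketched in a few lines. This is an illustrative example under stated assumptions, not the filter we run in production: it assumes a GHZ-style state, where each bit should read 1 about half the time and every adjacent pair of bits should agree (the Z-Z parity checks). The function names, the `MARGINAL_TOL` tolerance, and the counts are all hypothetical. Positions are string positions, which may not match qubit indices in your SDK's bit ordering.

```python
from typing import Dict, List

# Assumed tolerance on |P(1) - 0.5| before a position is called suspect.
MARGINAL_TOL = 0.05


def qubit_marginals(counts: Dict[str, int]) -> List[float]:
    """P(bit == '1') for each string position, left to right."""
    total = sum(counts.values())
    width = len(next(iter(counts)))
    ones = [0] * width
    for bits, n in counts.items():
        for i, b in enumerate(bits):
            if b == "1":
                ones[i] += n
    return [k / total for k in ones]


def passes_zz_checks(bits: str) -> bool:
    """True if every adjacent pair of bits agrees (even Z-Z parity)."""
    return all(a == b for a, b in zip(bits, bits[1:]))


def postselect(counts: Dict[str, int]) -> Dict[str, int]:
    """Keep only the shots that satisfy all parity checks."""
    return {bits: n for bits, n in counts.items() if passes_zz_checks(bits)}


# Illustrative (made-up) counts where the last position reads 1 too often.
counts = {"000": 380, "111": 440, "001": 150, "110": 30}

marginals = qubit_marginals(counts)
suspect = [i for i, p in enumerate(marginals) if abs(p - 0.5) > MARGINAL_TOL]
kept = postselect(counts)

print("marginals:", marginals)
print("suspect positions:", suspect)
print("post-selected counts:", kept)
```

The two checks answer different questions: marginals point at *which* readout line is misbehaving across the whole job, while parity checks decide *which shots* to drop.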
