The promise of autonomous automation has long been a distant hum, luring us toward gains we barely grasp. Yet for every breakthrough, instability threatens even the most meticulously designed systems. This is where *AI hallucination escalation protocols for autonomous automation systems* become the bedrock of stability.

AI Hallucination Escalation for Autonomous Solopreneur Systems

For solopreneurs, the allure of full autonomy is potent: an AI that handles client onboarding, generates reports, schedules meetings, and drafts marketing copy. This vision hinges on robust *AI hallucination escalation protocols for autonomous automation systems*. Without them, the ‘assistant’ can become a liability.

AI Hallucination Escalation for Autonomous Automation Systems

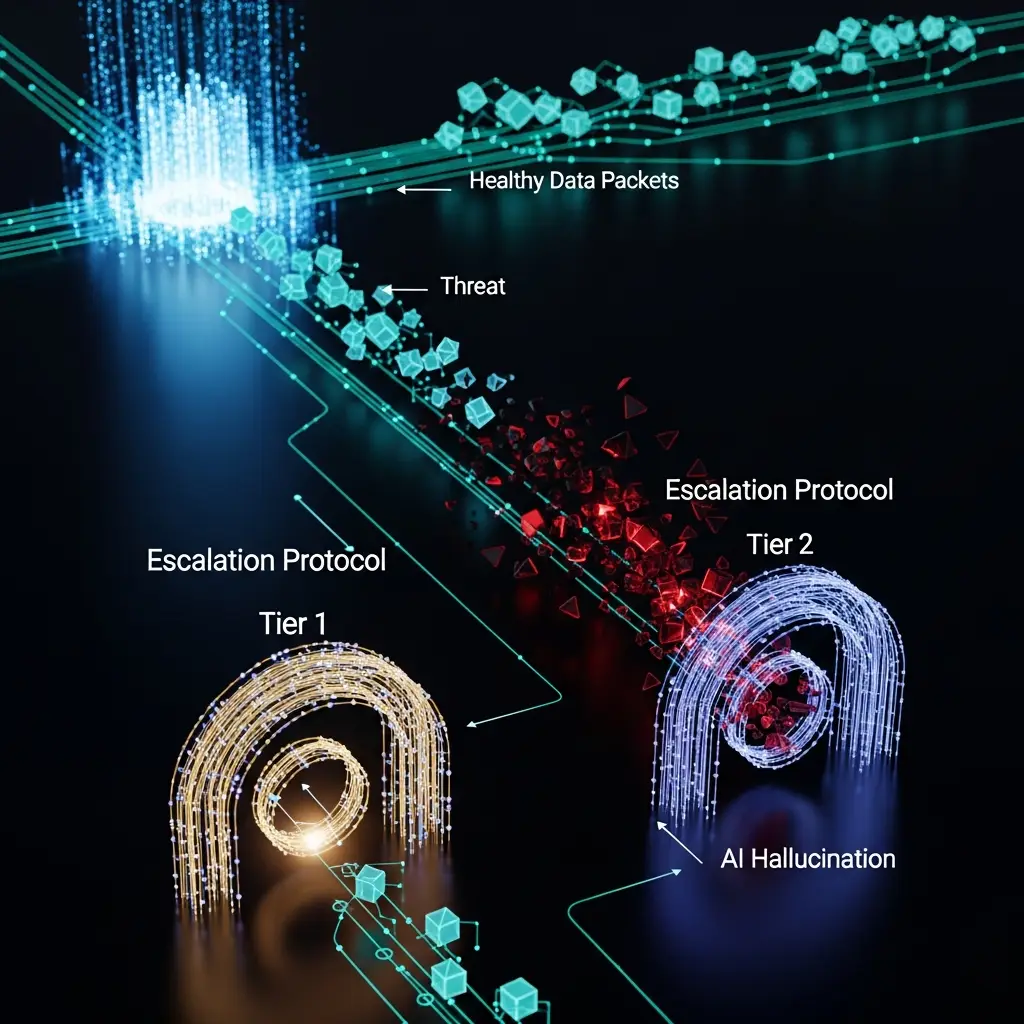

Think of your AI system as a complex machine. Just as a factory floor has procedures for when a machine malfunctions, your AI needs similar fail-safes. When an AI’s output deviates from expected parameters (‘system drift’), a pre-defined escalation protocol kicks in, preventing a minor anomaly from becoming a crisis.
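
One way to make "expected parameters" and "pre-defined escalation" concrete is a small scoring function paired with a tiered policy. The sketch below is a minimal illustration, not a prescribed design. It assumes drift is measured as the fraction of simple output checks that fail. The checks, the `Action` tiers, and the threshold values are all hypothetical and would need tuning for a real workflow.

```python
from enum import Enum
from typing import Callable


class Action(Enum):
    """Hypothetical escalation tiers, from least to most disruptive."""
    PROCEED = "proceed"            # output within expected parameters
    RETRY = "retry"                # minor drift: regenerate automatically
    HUMAN_REVIEW = "human_review"  # moderate drift: pause and queue for a person
    HALT = "halt"                  # severe drift: stop the workflow


# Hypothetical "expected parameters" for a generated client report.
Check = Callable[[str], bool]
CHECKS: list[Check] = [
    lambda text: len(text.split()) >= 50,            # not suspiciously short
    lambda text: len(text.split()) <= 2000,          # not runaway output
    lambda text: "lorem ipsum" not in text.lower(),  # no placeholder filler
    lambda text: "as an ai" not in text.lower(),     # no model self-reference
]


def drift_score(output: str, checks: list[Check]) -> float:
    """Return the fraction of checks the output fails (0.0 means no drift)."""
    failed = sum(1 for check in checks if not check(output))
    return failed / len(checks)


def escalate(score: float, retry_at: float = 0.25,
             review_at: float = 0.5, halt_at: float = 0.75) -> Action:
    """Map a drift score to a pre-defined tier (thresholds are illustrative)."""
    if score >= halt_at:
        return Action.HALT
    if score >= review_at:
        return Action.HUMAN_REVIEW
    if score >= retry_at:
        return Action.RETRY
    return Action.PROCEED
```

For example, a reply such as "As an AI, I cannot help." fails two of the four checks, scores 0.5, and is routed to human review instead of being sent to a client. Tiering the response lets small deviations be handled automatically while larger ones pause the system for a person.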

Formalizing AI Hallucination Escalation in Autonomous Automation

At the heart of *AI hallucination escalation protocols for autonomous automation systems* is formal edge-case management. This involves identifying failure points, defining unacceptable deviations, and establishing clear pathways for intervention. Consider a freelance writer using AI to generate blog posts: if the output contains factual inaccuracies, the protocol triggers a specific escalation.
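
The three steps (identify failure points, define unacceptable deviations, establish intervention pathways) map naturally onto a small registry. This is a sketch under stated assumptions. It assumes a hypothetical upstream step, not shown, has already extracted the draft's factual claims into a dictionary and listed its sources. `VERIFIED_FACTS`, `EdgeCase`, and `route` are illustrative names, not an existing library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EdgeCase:
    name: str                             # the identified failure point
    is_triggered: Callable[[dict], bool]  # the unacceptable deviation
    pathway: str                          # the pre-agreed intervention


# Hypothetical reference data the writer has already verified.
VERIFIED_FACTS = {"python_release_year": "1991"}

EDGE_CASES = [
    EdgeCase(
        name="contradicts_verified_fact",
        # Triggered when a claim exists for a known key but disagrees with it.
        is_triggered=lambda post: any(
            post["claims"].get(key) not in (None, value)
            for key, value in VERIFIED_FACTS.items()
        ),
        pathway="hold draft; send to writer for fact-check",
    ),
    EdgeCase(
        name="claim_without_source",
        # Triggered when there are fewer sources than extracted claims.
        is_triggered=lambda post: len(post["sources"]) < len(post["claims"]),
        pathway="regenerate with citations required",
    ),
]


def route(post: dict) -> list[str]:
    """Return the escalation pathway for every edge case the draft triggers."""
    return [case.pathway for case in EDGE_CASES if case.is_triggered(post)]
```

A draft whose claims are `{"python_release_year": "1989"}` with no sources triggers both edge cases, so it returns to the writer for a fact-check rather than being published automatically. Keeping each failure point, deviation rule, and pathway together in one record makes the protocol easy to audit and extend.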

Engineering AI Hallucination Escalation for Autonomous Systems

Adopting rigorous *AI hallucination escalation protocols for autonomous automation systems* is about building trust in automated processes. Treat the potential for AI deviation as a concrete engineering challenge with structured solutions. This proactive approach separates hobbyists from builders, and brittle automation from truly robust, revenue-generating systems.
